Today, we're excited to announce H2O Wave v0.9.0, with a new wave CLI, live-reload, improved performance, background tasks and ASGI compatibility.

Among other changes, the Wave server executable `wave` is now called `waved` (as in *wave daemon*), and the `h2o-wave` Python package ships with a new CLI named `wave`.

No more listening

`listen()` has been deprecated in favor of `@app`. Wave apps are now one line shorter.

Old way

In versions <= v0.8.0, a skeleton app looked like this:

```python
from h2o_wave import listen, Q

async def serve(q: Q):
    pass

listen('/demo', serve)
```

The above app could be run like this:

```shell
(venv) $ python foo.py
```

New way

In versions v0.9.0+, a skeleton app looks like this:

```python
from h2o_wave import main, app, Q

@app('/demo')
async def serve(q: Q):
    pass
```

Notably:

- `listen(route)` has been replaced by an `@app(route)` decorator on the `serve()` function.
- `main` needs to be imported into the file (but you don't have to do anything with the symbol `main` other than simply `import` it).
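The switch from `listen('/demo', serve)` to `@app('/demo')` follows Python's usual decorator-registration pattern. The sketch below is purely illustrative: `routes` and `app` are hypothetical stand-ins written with the standard library, not Wave's actual implementation. They only show how a decorator can record a handler under a route while leaving the function itself unchanged.

```python
import asyncio
from typing import Awaitable, Callable, Dict

# Hypothetical route table mapping a route string to its async handler.
# This is NOT h2o_wave's internal data structure, just an illustration.
Handler = Callable[[object], Awaitable[None]]
routes: Dict[str, Handler] = {}


def app(route: str) -> Callable[[Handler], Handler]:
    """Hypothetical decorator factory: registers the decorated handler under `route`."""
    def decorator(handler: Handler) -> Handler:
        routes[route] = handler  # the old API did this via listen(route, serve)
        return handler           # returned unchanged, so it can still be called directly
    return decorator


@app('/demo')
async def serve(q: object) -> None:
    print(f'handling request with {q!r}')


# A server would later look up and await the handler registered for a route.
asyncio.run(routes['/demo']('fake-query'))
```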

The above app can be run using `wave run`, which is built into the new `wave` command-line interface:

```shell
(venv) $ wave run foo
```

Live reload

The `wave run` command runs your app with live-reload, so you can see your changes as you code without having to refresh your browser manually.

```shell
(venv) $ wave run foo
```

To run your app without live-reload, pass `--no-reload`:

```shell
(venv) $ wave run --no-reload foo
```
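Conceptually, live-reload means watching your source files and restarting the app when one of them changes. The following is a simplified, hypothetical polling sketch using only the standard library; it is not how `wave run` is implemented (the command handles watching and restarting for you), and `snapshot` and `changed_files` are illustrative names.

```python
import os
import tempfile
import time
from typing import Dict, List


def snapshot(directory: str) -> Dict[str, float]:
    """Return {path: modification time} for every .py file under `directory`."""
    mtimes: Dict[str, float] = {}
    for root, _dirs, files in os.walk(directory):
        for name in files:
            if name.endswith('.py'):
                path = os.path.join(root, name)
                mtimes[path] = os.path.getmtime(path)
    return mtimes


def changed_files(before: Dict[str, float], after: Dict[str, float]) -> List[str]:
    """Files that were added, or whose modification time differs, between two snapshots."""
    return sorted(p for p, m in after.items() if before.get(p) != m)


# Demo: create an app file, snapshot it, edit it, and detect the change.
with tempfile.TemporaryDirectory() as tmp:
    app_file = os.path.join(tmp, 'foo.py')
    with open(app_file, 'w') as f:
        f.write('# v1\n')
    before = snapshot(tmp)
    with open(app_file, 'w') as f:
        f.write('# v2\n')
    # Push the mtime forward so the edit is visible even on coarse-grained filesystems.
    later = time.time() + 5
    os.utime(app_file, (later, later))
    changes = changed_files(before, snapshot(tmp))
    print([os.path.basename(p) for p in changes])  # a real watcher would restart the app here
```

A production watcher would typically rely on OS file-change notifications rather than polling, but the restart decision is the same.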

Improved performance

Both the Wave server and the app communication architecture have undergone significant performance and concurrency improvements across the board. Apps should now feel lighter, quicker, and more responsive under concurrent or heavy load.

The changes in v0.9 bring us closer to a v1.0 release. v1.0 will include the ability to increase the number of worker processes to scale apps, while preserving the simplicity of the Wave API.

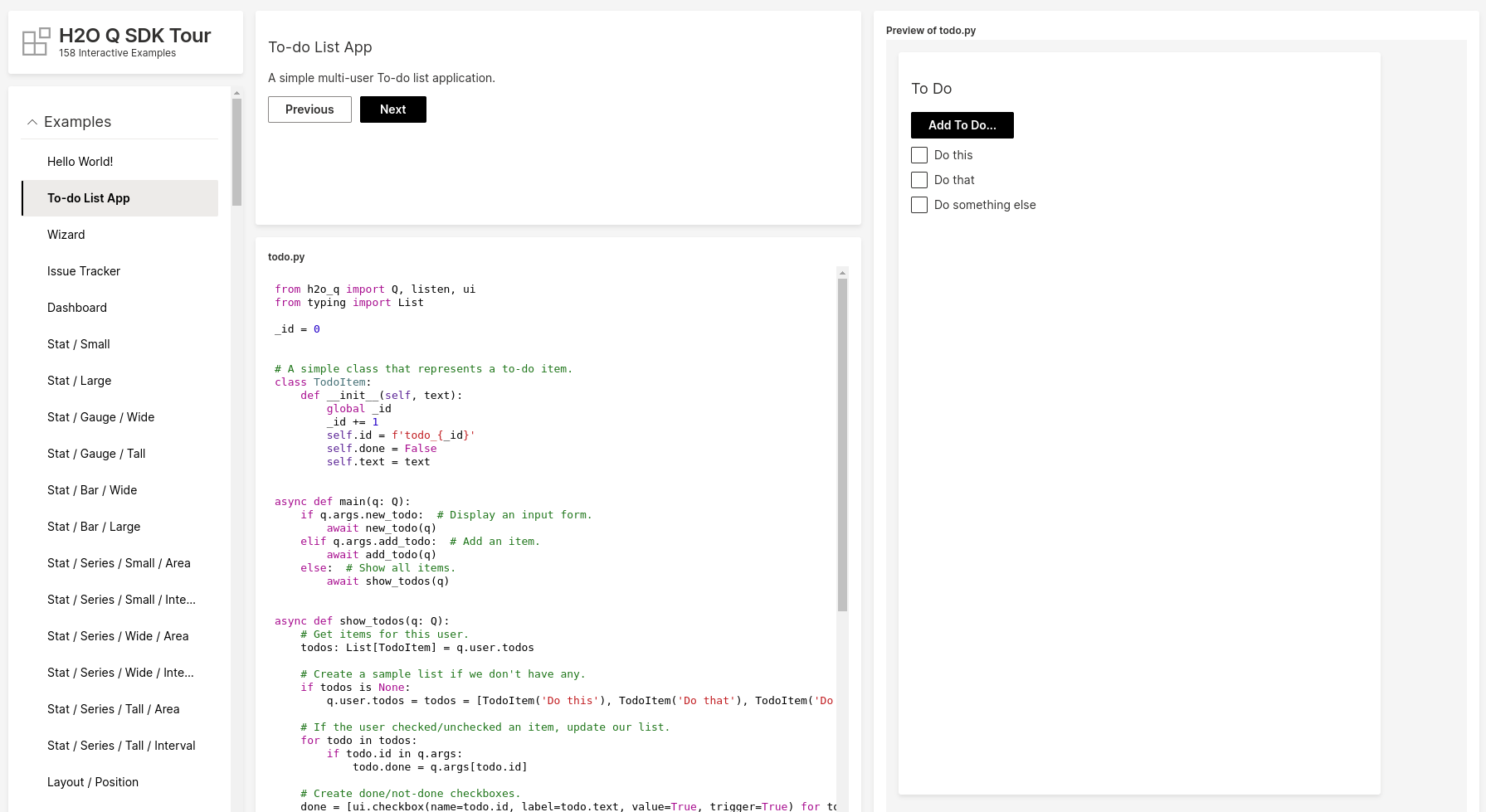

The Wave Tour (tour.py) is now quicker and more reliable:

Background tasks

The API now lets you run blocking calls in the background to keep your app responsive. The query context `q` has two lightweight wrappers over asyncio's `loop.run_in_executor()`: `q.run()` and `q.exec()`.
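To make the "lightweight wrapper" idea concrete, here is a hedged sketch of what such helpers could look like on top of the event loop's `run_in_executor()`. The names `run_blocking` and `exec_blocking` are hypothetical stand-ins for `q.run()` and `q.exec()`; Wave's own code may differ in details such as keyword-argument handling.

```python
import asyncio
import functools
from concurrent.futures import Executor, ThreadPoolExecutor
from typing import Any, Callable, Optional


async def exec_blocking(executor: Optional[Executor], func: Callable[..., Any],
                        *args: Any, **kwargs: Any) -> Any:
    """Hypothetical analogue of q.exec(): run func(*args, **kwargs) on `executor`.

    Passing None uses the event loop's default executor (a thread pool).
    """
    loop = asyncio.get_running_loop()
    # run_in_executor() only forwards positional arguments, so bind kwargs with functools.partial.
    return await loop.run_in_executor(executor, functools.partial(func, *args, **kwargs))


async def run_blocking(func: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
    """Hypothetical analogue of q.run(): exec_blocking() with the default executor."""
    return await exec_blocking(None, func, *args, **kwargs)


print(asyncio.run(run_blocking(sum, [1, 2, 3])))  # 6

with ThreadPoolExecutor(max_workers=2) as pool:
    print(asyncio.run(exec_blocking(pool, sorted, [3, 1, 2], reverse=True)))  # [3, 2, 1]
```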

Here is an example of a function that blocks:

```python
import time

def blocking_function(seconds) -> str:
    time.sleep(seconds)  # Blocks!
    return 'Done!'
```

To call the above function from an app, don't do this:

```python
@app('/demo')
async def serve(q: Q):
    # ...
    message = blocking_function(42)  # Blocks the event loop for 42 seconds!
    # ...
```

Instead, do this:

```python
@app('/demo')
async def serve(q: Q):
    # ...
    message = await q.run(blocking_function, 42)  # Runs in the background; the event loop stays free
    # ...
```

`q.run()` runs the blocking function in the background, in-process.
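The difference between the two versions of `serve()` shows up as soon as anything else needs the event loop. The self-contained sketch below uses plain `asyncio` (no Wave) to count how often a hypothetical `heartbeat()` task gets to run while a blocking call is in progress: first when the call is made directly, then when it is offloaded with `run_in_executor()`, the mechanism `q.run()` wraps.

```python
import asyncio
import time


def blocking_function(seconds: float) -> str:
    time.sleep(seconds)  # Blocks!
    return 'Done!'


async def heartbeat(ticks: list) -> None:
    """Hypothetical stand-in for other work sharing the loop (e.g. other users' requests)."""
    while True:
        ticks.append(time.monotonic())
        await asyncio.sleep(0.01)


async def count_heartbeats(offload: bool) -> int:
    """Count heartbeat ticks that happen while blocking_function(0.3) runs."""
    ticks: list = []
    task = asyncio.create_task(heartbeat(ticks))
    await asyncio.sleep(0)  # let the heartbeat take its first tick
    start = len(ticks)
    if offload:
        loop = asyncio.get_running_loop()
        await loop.run_in_executor(None, blocking_function, 0.3)  # roughly what q.run() does
    else:
        blocking_function(0.3)  # the "don't do this" version: the whole loop stalls
    count = len(ticks) - start
    task.cancel()
    try:
        await task
    except asyncio.CancelledError:
        pass
    return count


blocked = asyncio.run(count_heartbeats(offload=False))
offloaded = asyncio.run(count_heartbeats(offload=True))
print(f'heartbeats while blocked: {blocked}, while offloaded: {offloaded}')
```

Expect `blocked` to be 0 and `offloaded` to be in the tens; exact counts depend on the machine.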

Depending on your use case, you might want to use a separate process pool or thread pool from Python's `concurrent.futures` module, like this:

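```python
import concurrent.futures

from h2o_wave import main, app, Q

@app('/demo')
async def serve(q: Q):
    # ...
    # Run blocking_function on a dedicated thread pool instead of the default executor.
    with concurrent.futures.ThreadPoolExecutor() as pool:
        message = await q.exec(pool, blocking_function, 42)
    # ...
```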

`q.exec()` accepts a custom process pool or thread pool in which to run the blocking function.
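Passing your own executor mainly buys you control over sizing and isolation. As a rough, standard-library-only sketch (with `loop.run_in_executor()` standing in for `q.exec()`), a thread pool capped at two workers runs four blocking jobs in two waves; the worker count and timings are arbitrary choices for illustration. For CPU-bound work you would typically swap in `ProcessPoolExecutor`, which requires the function and its arguments to be picklable.

```python
import asyncio
import time
from concurrent.futures import ThreadPoolExecutor


def blocking_function(seconds: float) -> str:
    time.sleep(seconds)  # Blocks!
    return 'Done!'


async def run_jobs(pool: ThreadPoolExecutor, jobs: int, seconds: float) -> list:
    loop = asyncio.get_running_loop()
    # Submit every job at once; the pool decides how many actually run concurrently.
    futures = [loop.run_in_executor(pool, blocking_function, seconds) for _ in range(jobs)]
    return await asyncio.gather(*futures)


# At most two jobs run at a time, so four 0.2 s jobs take roughly 0.4 s in total.
with ThreadPoolExecutor(max_workers=2) as pool:
    start = time.monotonic()
    results = asyncio.run(run_jobs(pool, jobs=4, seconds=0.2))
    elapsed = time.monotonic() - start

print(results, f'{elapsed:.1f}s')
```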

ASGI compatibility

Wave apps are now ASGI-compatible, built on Starlette (an ASGI framework) and Uvicorn (a high-performance ASGI server).

You can run Wave apps behind any ASGI server, such as Uvicorn, Daphne, or Hypercorn, or behind Gunicorn using Uvicorn's worker class.

To run your app with an ASGI server like Uvicorn, append `:main` to the module name:

```shell
(venv) $ uvicorn foo:main
```
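Being ASGI-compatible means the object named after the colon (`main` in `foo:main`) is an ASGI application: an async callable taking `scope`, `receive`, and `send`. For intuition, here is a bare-bones, hypothetical ASGI app written without any framework (it is not Wave's `main`), driven by hand-rolled `receive`/`send` stubs in place of a real server.

```python
import asyncio


async def hello_app(scope: dict, receive, send) -> None:
    """A minimal ASGI HTTP app: responds 200 with a plain-text body."""
    if scope['type'] != 'http':
        return  # ignore lifespan and other protocols in this sketch
    await send({
        'type': 'http.response.start',
        'status': 200,
        'headers': [(b'content-type', b'text/plain')],
    })
    await send({'type': 'http.response.body', 'body': b'Hello from ASGI'})


async def call_once(app) -> list:
    """Simulate what an ASGI server does for a single GET / request."""
    sent: list = []

    async def receive() -> dict:
        return {'type': 'http.request', 'body': b'', 'more_body': False}

    async def send(message: dict) -> None:
        sent.append(message)

    scope = {'type': 'http', 'method': 'GET', 'path': '/', 'headers': []}
    await app(scope, receive, send)
    return sent


messages = asyncio.run(call_once(hello_app))
print(messages[0]['status'], messages[1]['body'])  # 200 b'Hello from ASGI'
```

Saved as `hello.py`, the same callable could be served with `uvicorn hello:hello_app`, exactly as `foo:main` is above.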

Download

Get the release here. Check out the release notes for more details.

We look forward to continuing our collaboration with the community and hearing your feedback as we further improve and expand the H2O Wave platform.

We’d like to thank the entire Wave team and the community for all of the contributions to this work!